Meta has taken down several advertisements promoting “nudify” apps—AI programs designed to generate sexually explicit deepfakes using images of real individuals—following a CBS News investigation that uncovered numerous such ads on its platforms.

“We enforce strict policies against non-consensual intimate imagery; we have removed these ads, shut down the pages responsible for them, and permanently blocked the related URLs,” a Meta representative stated via email to CBS News.

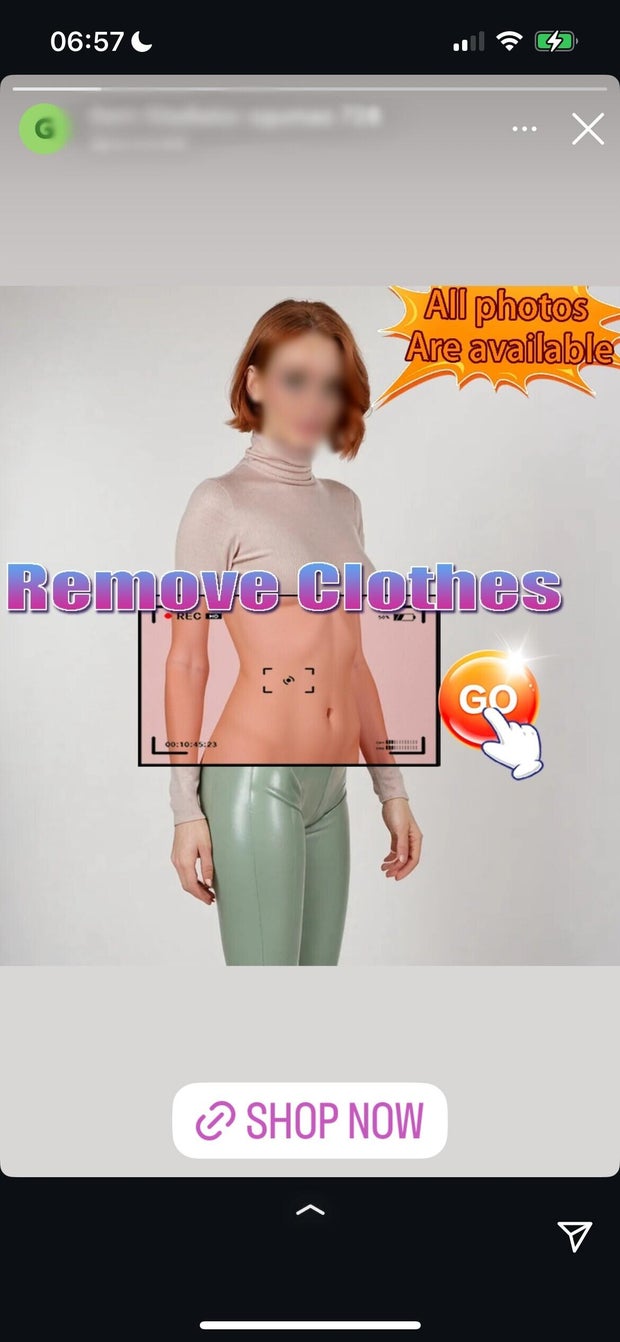

The CBS News investigation revealed a multitude of these ads on Meta’s Instagram, particularly in the “Stories” feature. Many of the ads touted AI tools that allowed users to “upload a photo” and “see anyone naked.” Other ads promoted video manipulation features involving real people. One ad asked, “how is this filter even allowed?” in text below an example of a nude deepfake.

One advertisement showcased its AI product using highly sexualized, underwear-clad deepfake images of actresses Scarlett Johansson and Anne Hathaway. Many ad URLs led to websites that marketed the ability to animate real people’s images into performing sexual acts, with some applications charging users between $20 and $80 for “exclusive” and “advanced” features. Other ads directed users to Apple’s app store, where “nudify” apps were accessible for download.

An examination of Meta’s Ad Library found, at a minimum, hundreds of such advertisements across its social media platforms, including Facebook, Instagram, Threads, the Facebook Messenger app, and Meta Audience Network, a service that enables advertisers to reach users on affiliated mobile apps and websites.

Meta’s own Ad Library data indicates that many of these ads primarily targeted men aged 18 to 65 and were running in the United States, the European Union, and the United Kingdom.

A Meta spokesperson acknowledged to CBS News that the proliferation of this type of AI-generated content is a persistent issue, presenting increasingly complex challenges for the company.

“The creators of these exploitative apps consistently adapt their methods to avoid detection, which compels us to continually enhance our enforcement efforts,” the spokesperson remarked.

Even after Meta removed the ads that were initially flagged, CBS News found that ads promoting “nudify” deepfake tools remained visible on Instagram.

Deepfakes are images, audio clips, or videos of real individuals that have been altered with artificial intelligence to misrepresent what someone actually said or did.

Recently, President Trump signed the bipartisan “Take It Down Act,” which requires websites and social media firms to remove deepfake content within 48 hours of notification from a victim.

While the law prohibits the “knowing publication” of, or threats to publish, intimate images without a person’s consent, including AI-generated deepfakes, it does not target the tools used to create them.

These tools do, however, violate the platform safety and moderation policies that Apple and Meta enforce on their respective platforms.

Meta’s advertising standards state that “ads must not contain adult nudity or sexual activity. This includes nudity, depictions of individuals in explicit or sexually suggestive poses, or activities that are sexually suggestive.”

Meta’s “bullying and harassment” policy likewise prohibits “derogatory sexualized photoshopped images or drawings” on its platforms, and the company says it works to prevent the sharing, or threatened sharing, of non-consensual intimate imagery.

Apple’s app store guidelines explicitly ban “content that is offensive, insensitive, upsetting, intended to disgust, in exceptionally poor taste, or just plain creepy.”

Alexios Mantzarlis, director of the Security, Trust, and Safety Initiative at Cornell University’s technology research center, has been studying the rise of AI deepfake advertisements on social media for over a year. In a phone interview with CBS News, he noted a significant increase in such ads on Meta’s platforms and others like X and Telegram.

While he acknowledged the structural “lawlessness” on platforms like Telegram and X that allows such content to proliferate, he expressed skepticism regarding Meta’s commitment to addressing the issue, despite the presence of content moderators.

“I believe that trust and safety teams within these companies genuinely care, but I don’t think that the top leadership at Meta shares the same level of concern,” he commented. “It’s evident that they are under-resourcing the teams tasked with combating these issues, given the sophistication of these [deepfake] networks … they can’t match Meta’s financial resources to tackle it.”

Mantzarlis further uncovered that “nudify” deepfake generators are available for download on both Apple’s app store and Google’s Play store, voicing his frustration over these large platforms’ failure to enforce their content policies.

“The issue with apps is that they present a dual-use front, marketing themselves on the app store as entertaining ways to swap faces, but they are promoting themselves on Meta primarily for nudification purposes. As a result, when these applications are evaluated in the Apple or Google stores, they often lack the necessary resources to ban them,” he said.

“It is essential to have cooperation across industries; if an app or website advertises itself as a nudification tool anywhere online, everyone else should have the authority to say, ‘I don’t care how you present yourself on my platform, you are gone,’” Mantzarlis emphasized.

CBS News has reached out to Apple and Google to inquire about their moderation practices. As of the time this was written, neither company had provided a response.

The promotion of such applications on major tech platforms raises significant concerns regarding user consent and online safety for young people. A CBS News analysis of one “nudify” website advertised on Instagram revealed that the site did not require any form of age verification before allowing users to upload images to create deepfake pictures.

This is a widespread issue. In December, CBS News’ 60 Minutes highlighted the absence of age verification on one of the most frequently visited sites that uses artificial intelligence to produce fake nude images of actual people.

Even though users were informed that they had to be 18 or older to access the site and that “processing of minors is impossible,” 60 Minutes was able to gain immediate access and upload images just by clicking “accept” on the age warning prompt, with no further age checks required.

Research indicates that a significant number of underage teens have encountered deepfake content. A March 2025 study by the child-protection nonprofit Thorn found that 41% of teens had heard of the term “deepfake nudes,” and 10% personally knew someone who had had deepfake nude images made of them.